
The Mimesis of Difference: A Deleuzian Study of Generative AI in Artistic Production

Jakub Mácha

Abstract

This study uses the traditional concept of mimesis, reframed by Deleuze as a repetition of difference, to investigate art created by large language models (LLMs) or generative pretrained transformers (GPTs). The current rise of artificial intelligence (AI) is intensifying the mimetic repetition of the different. An AI system repeats carefully selected elements from its extensive training dataset. Rearranging these elements introduces differences in the mimetic repetition. Since the training dataset is updated over longer time frames, it is analogous to a static Platonic universe. Once AI can update its static elements with each repetition, it will become the true Dionysian machine, capable of repeating simulacra. Nonetheless, I argue that human agency cannot be entirely eliminated. Large language models can be considered the most effective technological tool to assist human artists in creating their artworks. Therefore, it is preferable to view AI artworks as AI-assisted, but human-created.

Key Words

art; difference; Dionysian machine; parameter tensors; GPT; inverted Platonism; LLM; mimesis; repetition

1. Introduction

This article begins by considering the concept of mimesis as applied to art. Although I acknowledge the influence of ancient sources on our understandings of this concept, my primary focus is on Gilles Deleuze’s reinterpretation of mimesis as the repetition of difference. The main idea that I seek to explore here is that modern artificial intelligence (AI) systems, such as large language models (LLMs), engage in similar repetitions during their computations. In other words, AI systems are capable of performing mimesis, as (re)defined by Deleuze. This leads to the central question: Can LLMs create art, or can their outputs be considered artworks? I conclude that, given their current architecture, LLMs alone cannot produce artworks. However, there is the potential for future LLMs to do so.

Section 2 elucidates the concept of mimesis from a historical perspective, covering the period from Plato to the present day (2.a), Deleuze’s reinterpretation of mimesis as the repetition of difference (2.b), and the interrelation between mimesis and the notion of the death of the author (2.c). Section 3 introduces the concept of AI-generated art (3.a) and gives a detailed account of the functioning of LLMs (3.b). The main argument is presented in section 4. First, in subsection 4.a, I argue that in the course of their computations, LLMs perform a repetition of difference, that is, mimesis in Deleuze’s sense. Subsection 4.b then looks at whether LLMs can dynamize their static elements and become true Dionysian machines. Subsections 4.c and 4.d present illustrative examples of these concepts. The first example, Andy Warhol’s Marilyn Diptych, is a work derived from conventional artistic forms. The second, Anna Ridler’s Synthetic Iris Dataset, represents an instance of LLM-generated art. The concluding section 5 discusses the potential implications of the advent of generative AI for the art world and the field of aesthetics, drawing parallels to the emergence of photography.

2. Mimesis

2.a. Historical perspective

The concept of mimesis is deeply rooted in the Western aesthetic and philosophical tradition. It is a multifaceted idea with a rich historical trajectory that spans from ancient Greece to contemporary art and theory. Traditionally, mimesis is understood as imitation, replication, or representation of nature. Yet what imitation is, and what kind of nature is supposed to be imitated, has never been easy to determine. Plato views mimesis critically as the imitation of the physical world, which itself is an imperfect copy of the eternal world of Forms, making art twice removed from truth and thus a source of ethical disquiet. Nonetheless, he acknowledges its educational use in fostering virtues among the youth in his ideal state. Aristotle, on the other hand, sees mimesis as the representation of action in art, valuing its ability to depict and even idealize reality. In his view, the goal of art, especially tragedy, is or should be to present moral exemplars. Both thinkers distinguish between good and bad mimesis: Plato values imitations of eternal Forms, while Aristotle focuses on the transformative nature of the mimetic process. In short, Plato cares about what is transformed, whereas Aristotle cares about how it is transformed.

In its modern form, mimesis encompasses imitations of social realities, including aspects of the art world. The evolution of aesthetic theory and art styles, particularly since the dawn of modernism, has broadened our understanding of mimesis. Through their embrace of abstraction and conceptual art, modernism and postmodernism have fostered a reinterpretation of mimesis. In its modern and postmodern forms, mimesis becomes a process through which art reflects, distorts, and reimagines reality, playing with the boundaries between the real and the created.[1] Within this trajectory, we can discern two tendencies: Mimesis is emancipated from its dependence on the external object of reference (external nature), and the distinction between positive and negative mimesis is called into question.

2.b. Mimesis in Deleuze

These two tendencies converge in the work of Gilles Deleuze, whose conception of mimesis and repetition revolutionizes traditional understandings of artistic creation and interpretation. His ideas challenge the notion that art merely imitates or reflects the world and instead present art as a dynamic process of inventive repetition. This perspective reshapes how we perceive the role of the artist, the nature of artistic expression, and the relationship between art and reality.

Deleuze’s philosophical project, notably in works like Difference and Repetition and The Logic of Sense,[2] and his exploration of the concept of the simulacrum represent a significant departure from traditional Platonic metaphysics, leading to what is often described as an inverted Platonism. His innovative interpretation reimagines the hierarchy and value of forms, copies, and simulacra, challenging Plato’s conception of imitation and reality. For Plato, a simulacrum is a false copy that misrepresents the original Form, a deceptive imitation that lacks the truth or essence of the model it seeks to replicate. Plato posits a clear hierarchy of being in which the Form is the highest level of reality, followed by material instances of that Form and, finally, imitations or representations of those instances, which are considered the least real.

Deleuze inverts this hierarchy by highlighting the simulacrum. Rather than seeing it as a degraded copy, he redefines simulacra as embodiments of difference and uniqueness. Simulacra do not derive their existence from an eternal, preexisting model; rather, they stand on their own as singular realities that repeat and reimagine a preceding member of a series, hence challenging the notion of originality and the primacy of Forms. This reconceptualization disrupts the traditional binary of model and copy, suggesting that reality is constituted by a multiplicity of differential expressions rather than a hierarchy of Forms. It celebrates the creative and generative power of difference and variation. Simulacra are not mere imitations, but constitute a productive and dynamic reality.

The concept of the Dionysian machine[3] is less directly articulated by Deleuze, but can be understood in the context of his philosophy as part of the overall machinery of difference and repetition that underlies reality. The Dionysian, a term borrowed from Nietzsche, is a chaotic, creative, and affirmative force that stands in contrast to the static and ordered Apollonian. In relation to Deleuze’s inverted Platonism, the Dionysian machine may be seen as a metaphor for the process that generates simulacra, not through brute imitation but through the affirmative production of differences, that is, creative imitation. It embodies a force that dismantles hierarchies and fixed identities, celebrating the flux, becoming, and diversity of the real. The Dionysian aspect emphasizes the joyful and libidinal energies that fuel the creation and proliferation of simulacra, and it foregrounds the processual and transformative aspects of reality over static Forms or essences.

Deleuze’s inversion of Platonism, with the central place it gives to the concepts of simulacrum and the Dionysian, represents a radical rethinking of metaphysics and ontology. By dethroning the primacy of original Forms and embracing the generative potential of difference, Deleuze proposes a fluid and dynamic conception of reality. His philosophy celebrates the power of the simulacrum and the creative chaos of the Dionysian as fundamental to understanding and experiencing the world, marking a departure from the search for immutable essences and instead focusing on the perpetual becoming and transformation of life.

If Plato’s theory, which posits Forms as the ultimate reality, is rejected, mimesis loses its hierarchical structure of good and bad imitations and good and bad copies. If simulacra are self-sufficient singularities rather than derivative copies, mimesis becomes a relation of difference between simulacra rather than a measure of fidelity to an ideal Form. Furthermore, simulacra do not refer to any external reality—they are themselves the ultimate reality. This is how the two tendencies described above find their expression in Deleuze’s framework.

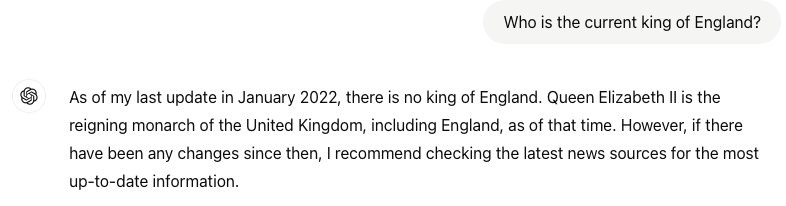

These considerations are directly relevant to art. According to Deleuze, “art does not imitate, above all because it repeats; it repeats all the repetitions, by virtue of an internal power (an imitation is a copy, but art is simulation, it reverses copies into simulacra).”[4] While it may seem that there is plain, bare repetition in art, as in ornamental patterns, musical motifs, or refrains (in short: geometrical forms), Deleuze believes that this repetition is displaced with respect to another repetition of simulacra, a phenomenon he calls “clothed repetition.” He cites Warhol’s Pop Art as an example of this concept. Warhol “pushed the copy, copy of the copy, etc., to that extreme point at which it reverses and becomes a simulacrum.”[5] Warhol’s machine-made portraits, which are almost indistinguishable from one another yet still different, perfectly exemplify simulacra in art.

2.c. Mimesis vs. the author’s expression

Deleuze’s ideas about art are closely tied to life as the primary creative force. In contrast, AI tools are lifeless machines. To preserve life as the source of creativity, we should turn to thinking about art from the perspective of the viewer or recipient. This involves a partial separation of the connection between the artist and the artwork. Moreover, independence from an external reference point also means independence from the author.

According to Roland Barthes’s famous structuralist notion of the “death of the author,” the author’s intentions, background, or context should not limit the interpretation of a text. Instead, meaning emerges from the interaction between the text and its reader. This idea echoes Plato’s critique in the Phaedrus, in which he likens written words to paintings that seem alive but cannot interact or defend themselves without their creator. In contrast, Hegel views art as reliant on its audience for significance, marking a shift in emphasis from the creator’s intent to the spectator’s interpretation. Both Plato and Hegel, like Barthes, suggest that art and text possess value and meaning not inherently but through the engagement and interpretation of their audience. This conception focuses less on the artist or creator and more on the interpretive freedom allowed by the artwork and the role of the audience in bringing art to life.

Approaches of this sort assume particular importance when we consider works of art produced by nonhuman agents (or apparent agents) like AI. Many arguments against AI-generated art express concerns about artists and their human characteristics such as experience, emotions, personhood, and intellectual faculties. But if the role of the artist is diminished, these objections lose their relevance.[6]

3. Art and artificial intelligence

3.a. AI-generated art

Can AI create or generate art? This question already suggests a certain bias, because by centering the role of the artist, it suggests that it is the artist (and only the artist) that can give an artifact its artistic status. We should ask instead: Can things produced by AI be considered art?[7] This question presupposes a much broader notion of what art is. We cannot hope to address these issues in detail within the scope of this essay, nor to comprehensively survey the vast fields of AI-based approaches to art and machine or computational creativity.[8] Recent literature has tended to focus on art created primarily by human agents, but with substantial assistance from AI tools,[9] on how the nature of artworks is shifting from artifacts to processes and infrastructures,[10] and on evaluating AI with respect to various notions of creativity.[11] Here, however, I will focus more narrowly on the status of AI-generated artworks within the mimetic conception of art. Within the field of AI, I will concentrate on large language models (LLMs), and especially on generative pretrained transformers (GPTs) such as ChatGPT, which have achieved great prominence in recent years. For the sake of simplicity, the discussion will concentrate solely on textual GPTs and leave out transformers that operate in other domains (such as DALL·E, which transforms text into images). However, the implications of the arguments presented here are not confined to textual art.

Mark Coeckelbergh considers AI-generated art through the prism of the mimetic theory of art. He makes two key points:

First, if we assume an expressivist view, then it seems not possible for machines to engage in true artistic creation, since this presupposes some “inner” state or inner self, and as far as we know, machines do not have such an inner state.

[…]

Second, if we assume a mimesis conception of artistic process, however, the machine has at least a chance to be included in the domain of artistic work, since the only thing that matters is imitation. Unless one can come up with a different definition of mimesis, it seems that this is simply what mimesis means. Hence, if a machine is capable of imitating whatever it “sees,” then according to this criterion, it seems that what it does qualifies as artistic creation.[12]

According to the expressivist view, art expresses the inner subjective state of the artist. I agree with Coeckelbergh that machines lack inner subjective states. (This is a much-debated claim, however, and defending it would be beyond the scope of this article, so I shall not attempt to do so here.) If machines do not have anything to express, then according to the expressivist account of art they cannot produce art.

Things are quite different with the mimetic conception of art. We can simply assume that AI machines are capable of imitating and transforming their inputs. However, as noted above, ever since Aristotle, mimesis has not referred merely to crude imitation. As Coeckelbergh suggests, “a different definition of mimesis” must be in place. Earlier, I suggested that if we follow Deleuze and reinterpret mimesis as a repetition of difference, LLMs might one day gain the ability to generate simulacra and consequently create art. This assertion is made with the caveat “one day,” since in their present state LLMs do not yet fully embody the Dionysian machines envisaged by Deleuze.

3.b. Large language models and GPTs in detail

Let us look at LLMs and, more specifically, GPTs in more detail.[13] LLMs and GPTs utilize an intricate process of analyzing and generating text that is fundamentally grounded in their representations of the massive datasets they are trained on. These datasets are usually scraped from public online sources such as Wikipedia, news articles, and books. The crucial point is that data can be used for training regardless of their truthfulness, reference to actual objects, or even meaningfulness. Both false and true data can be equally useful; fiction can be just as valuable as brute facts, and a coherently argued essay can be as good as random nonsense from the internet.

During the training process, the training data is transformed into parameter tensors, also known as model weights, in the deep neural network that makes up the LLM. First, the language data is transformed into a matrix of numbers via the embedding matrix. The embedding space is a high-dimensional space in which words, phrases, or even whole sentences are mapped to vectors of real numbers.[14] This mapping allows the vectors to encapsulate semantic and syntactic information about the language elements they represent. Essentially, the parameter tensors serve as the model’s understanding of the language, with similar meanings being placed closer together in the vector space.[15] This enables the model to infer context, make predictions, and generate coherent responses based on the input it receives. Hence, the model weights represent the language rather than the world. To put it another way, LLMs are, as the name suggests, models of language, not models of the world or of true facts.
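
The idea that similar meanings lie closer together in the vector space can be illustrated with a minimal sketch. The three-dimensional vectors below are invented for illustration only; real embedding matrices are learned during training and have hundreds or thousands of dimensions. The names `EMBEDDINGS`, `embed`, and `cosine_similarity` are hypothetical helpers, not part of any particular model, and cosine similarity is just one common way of measuring closeness.

```python
import math

# Hypothetical toy "embedding matrix": one hand-picked vector per token.
# Real models learn these values; nothing here comes from an actual LLM.
EMBEDDINGS = {
    "iris":   [0.9, 0.8, 0.1],
    "violet": [0.8, 0.9, 0.2],
    "tensor": [0.1, 0.2, 0.9],
}

def embed(token):
    """Look up the vector assigned to a token."""
    return EMBEDDINGS[token]

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

sim_related = cosine_similarity(embed("iris"), embed("violet"))
sim_unrelated = cosine_similarity(embed("iris"), embed("tensor"))
print(f"iris ~ violet: {sim_related:.3f}")
print(f"iris ~ tensor: {sim_unrelated:.3f}")
```

In this toy space, "iris" and "violet" point in nearly the same direction, whereas "iris" and "tensor" do not. The vectors encode nothing about flowers or mathematics as such, only relative positions among language elements, which is the sense in which the weights model language rather than the world.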

The training process involves adjusting the weights of the deep neural network through exposure to vast amounts of text, allowing the model to refine its representations and improve its performance. Another key point is that after this initial training adjustment, subsequent fine-tuning is not a fully automated process. A method known as “reinforcement learning from human feedback” (RLHF) is critical for fine-tuning LLMs. Humans annotate text and label examples, evaluate model outputs during and after fine-tuning to measure performance on key metrics, and identify and correct errors. Their feedback enables models to learn human preferences and expectations. All of these complex processes help to capture the subtle relationships between various language components in the training data and their representation in the parameter tensors of the deep neural network.

As we will recall, GPT stands for “generative pretrained transformer.” Being “pretrained” means the model’s weights (parameter tensors) are fixed after initial training. As of April 2024, the datasets for the most widely used models are at least a year old; for instance, GPT-3.5 was trained on data up to January 2022, and Claude 2 used data available up until April 2023. We can therefore describe LLMs as inherently static language models.

Update rates for major revisions are provided by the model itself or the company that runs it. However, we can never be sure when the model is being fine-tuned or which parts of the system are being adjusted on a daily basis.[16] In addition, the hidden instructions given to the model before our query, along with any filtering that occurs before reaching the actual model, may change more frequently than we realize. If such frequent changes do occur, the path to AI systems as Dionysian machines would be more straightforward.

Let us focus on the transformer core of GPTs and its key component: the attention mechanism.[17] The attention mechanism is intricately intertwined with the model weights, forming the crux of its advanced processing capabilities. In essence, when input text is fed into a GPT model, each word is initially transformed into a dense vector representation via the parameter tensors. These model weights serve as a comprehensive distillation of the word’s semantic properties. The attention mechanism then comes into play and uses vector multiplication to calculate attention scores, which quantify the relevance of all other words in the context for predicting a specific word. This process involves multiplying the query vector (associated with the word being predicted) by key vectors (associated with contextual words) to determine their alignment within the vector space. Consequently, the model dynamically adjusts its focus, tuning into the most pertinent segments of the input data. Within this process, there is a parameter, referred to as ‘temperature,’ that influences the randomness or predictability of the output generated by the model.[18] Specifically, it adjusts the distribution of probability over the set of possible output tokens (for example, words or characters) given a certain input. The resultant weighted sum, synthesized through these multiplications, feeds into the subsequent layers, culminating in a model output that is remarkably context-aware and linguistically coherent. Through this sophisticated orchestration of the model weights, GPTs achieve their trademark fluency and adaptability in language tasks.
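
The two operations described above, attention scores and temperature, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the implementation of any actual GPT: it handles a single query, uses invented two-dimensional vectors, follows the standard "scaled dot-product" formulation (dividing scores by the square root of the vector dimension), and applies temperature to the final token scores, which is where it is usually applied in practice. The function names `softmax` and `attend` are hypothetical.

```python
import math

def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities; temperature rescales them first."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Single-query scaled dot-product attention over a small context."""
    dim = len(query)
    # Attention score: alignment of the query with each key vector.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
              for key in keys]
    weights = softmax(scores)
    # Output: weighted sum of the value vectors.
    output = [sum(w * value[i] for w, value in zip(weights, values))
              for i in range(len(values[0]))]
    return weights, output

# Invented toy vectors for three context words.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, context = attend(query, keys, values)
print("attention weights:", [round(w, 3) for w in weights])

# Temperature reshapes the probability distribution over candidate tokens.
logits = [2.0, 1.0, 0.1]  # hypothetical raw scores for three tokens
sharp = softmax(logits, temperature=0.5)  # low temperature: more predictable
flat = softmax(logits, temperature=2.0)   # high temperature: more random
print("T=0.5:", [round(p, 3) for p in sharp])
print("T=2.0:", [round(p, 3) for p in flat])
```

The context word whose key aligns best with the query receives the largest weight. Lowering the temperature concentrates probability on the most likely token, while raising it spreads probability more evenly across the alternatives.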

4. Deleuzian perspective

4.a. Clothed repetition

I suggest interpreting these matrix and vector calculations as mimetic repetitions in Deleuze’s sense, that is, as repetitions of simulacra. The input vector representing the input text is gradually transformed into a probability distribution that makes it possible to predict the most likely follow-up word. Deleuze focused on differential calculus, whereas large language models (LLMs) rely on linear algebra—a distinct mathematical discipline involving linear spaces. However, vector and matrix values are real numbers that can be taken as intensities, again in Deleuze’s sense, that are gradually transformed during the calculations. What matters is that LLMs express certain kinds of “problems” and are designed to calculate “solutions” to those problems. Accordingly, Deleuze maintains that “the differences in kind between differential calculus and other instruments [are] merely secondary.”[19] Adjustments to the parameters of matrices and vectors during the transformation are just differentials representing infinitesimal changes. Recontextualized in this way, the following account of calculations by Deleuze describes precisely what GPTs do:

There is an iteration in calculus just as there is a repetition in problems which reproduces that of the questions or the imperatives from which it proceeds. Here again, however, it is not an ordinary repetition. Ordinary repetition is prolongation, continuation or that length of time which is stretched into duration: bare repetition […]. On the contrary, what defines the extraordinary power of that clothed repetition more profound than bare repetition is the reprise of singularities by one another, the condensation of singularities one into another, as much in the same problem or Idea as between one problem and another or from one Idea to another. Repetition is this emission of singularities, always with an echo or resonance which makes each the double of the other, or each constellation the redistribution of another.[20]

As noted above, Deleuze distinguishes a bare repetition of the same from a clothed repetition of the different, a distinction that aligns with the two kinds of mimesis described earlier: negative and positive.[21] In Deleuze’s delineation of clothed repetition, we can identify key components of LLMs’ transformation. At the beginning, there is a question or imperative, as each LLM transformation requires user input. High-dimensional tensors are singularities that are emitted and condensed into one another by the attention mechanism. In a series of transformations, each vector, matrix, or tensor echoes or resonates with its predecessors; it is a redistribution of its predecessors, all the way back to the initial input and the model weights.

We can now understand how LLMs work in relation to Deleuze’s concept of inverted Platonism, which involves the world of simulacra and Dionysian machines. Inverted Platonism means that there are no metaphysically elevated Forms that serve as models for physical things. Instead, every singular thing or occurrence has the potential to be a model of another occurrence. One thing can simulate another, and in this sense, they are both simulacra. The model and the modeled object are of equal metaphysical status, and they are all in a constant state of flux.

The question is whether LLMs are true Dionysian machines with exclusively dynamic elements. The input text, which is tokenized and transformed into an embedding vector, constantly changes in the course of the transformations. Nevertheless, one element remains constant: the model weights, represented mathematically as parameter tensors; these constitute a linguistic model of the training data. Hence, we could say that the model weights serve a role analogous to the Platonic Forms because the model determines how the input vector is transformed into the output vector, though it does not completely determine it due to the temperature, which adds an element of randomness. The model weights (parameter tensors) are the model of the whole language and hence do not correspond to a single Form, but rather to a totality of all Forms—to the Platonic heaven, so to speak. Given the fixed nature of the model weights, we can infer that GPTs, as they function today, more closely align with traditional Platonism and are thus not Dionysian machines. This conclusion does not necessarily mean that current LLMs cannot create art, only that they do not engage in creative mimesis as defined in Deleuze’s account of difference.

4.b. Might future large language models be Dionysian machines?

It would be possible to conclude the discussion here. But I believe there is value in considering whether LLMs could, in the foreseeable future, evolve into Dionysian machines. The developers of current LLMs release major updates of their models, including full retraining, significant architectural changes, enhancements, and major new features, every year or two. This is the pattern we can observe with the major models from organizations such as OpenAI (the GPT series) and Google (BERT and its successors). Moore’s Law, taken loosely as the claim that technological development progresses exponentially, suggests that updates will become more frequent in the future, a trend we can already observe today. If models are currently updated once a year, they could soon be updated once a day. However, in order to be true Dionysian machines, they would need to update the parameter tensors dynamically with each prompt.[22] There are currently techniques, like adaptive learning or lifelong learning, in which a model continues to learn and revise its parameters post-deployment based on new data. This is closer to the concept of dynamically adjusting parameter tensors, although implementing this at scale with current technologies while maintaining coherence and accuracy remains challenging. Moreover, by contrast with how model updates currently work, these sorts of instant updates cannot involve human feedback if they are to constitute a Dionysian machine.
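
The contrast between fixed weights and weights revised with each prompt can be made concrete with a deliberately tiny sketch. It is an illustration under strong assumptions, not a description of how any deployed LLM works: the "model" is a two-parameter linear predictor, the update is one step of online gradient descent, and the `target` value stands in for a learning signal derived automatically from the prompt itself rather than from human feedback. All names and numbers are hypothetical.

```python
def predict(weights, features):
    """Toy model: a weighted sum of the input features."""
    return sum(w * x for w, x in zip(weights, features))

def online_update(weights, features, target, learning_rate=0.1):
    """One gradient step on squared error; returns a new weight list."""
    error = predict(weights, features) - target
    return [w - learning_rate * error * x for w, x in zip(weights, features)]

frozen = [0.0, 0.0]   # "pretrained" weights: consulted but never changed
dynamic = [0.0, 0.0]  # weights that are revised after every prompt

# Each prompt is a feature vector plus an automatically derived target.
prompts = [([1.0, 0.0], 1.0), ([0.0, 1.0], 2.0), ([1.0, 1.0], 3.0)]

for features, target in prompts:
    predict(frozen, features)  # static model: output only, no learning
    dynamic = online_update(dynamic, features, target)  # changes per prompt

print("frozen weights: ", frozen)
print("dynamic weights:", [round(w, 2) for w in dynamic])
```

After three prompts the frozen weights are exactly what they were at the start, whereas the dynamic weights have shifted with every input. Scaling this kind of per-prompt revision to the billions of parameters in an LLM, without losing coherence, is the practical difficulty noted above.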

The model weights are, as it were, the main element steering all the transformations. Let us turn, for the last time, to Deleuze and ask whether we can find an analogous element in Dionysian machines. The model weights are identical elements in the sense that they guarantee the identity of the whole transformation process. We cannot expect to find any such identical element in Deleuze. However, there are several differential elements that may fit this role: the dark precursor and the aleatory point (also called the still intact phallus and the castrated phallus in The Logic of Sense). In short, the former accounts for the ultimate difference of all differences, whereas the latter is responsible for introducing chance into the system.

The dark precursor refers to the entity that mediates the differential elements, disparities, or heterogeneous terms that participate in the process of becoming. In simpler terms, it is the unseen mediator that allows different entities to communicate or interact without being a part of the communication. In the case of infinitesimal calculus, the precursor is the differential of different series; it is always escaping to a higher level, which is why it is labeled “dark.” In the case of textual systems, the linguistic precursor appears as a nonsensical (esoteric, poetic, portmanteau) word. Deleuze relates repetition to the power of language, which relies on an “idea of poetry.” He expands on this point as follows:

In order for a real poem to emerge, we must “identify” the dark precursor and confer upon it at least a nominal identity—in short, we must provide the resonance with a body; then, as in a song, the differenciated series are organised into couplets or verses, while the precursor is incarnated in an antiphon or chorus. The couplets turn around the chorus.[23]

Deleuze developed this concept further in his later works, where he speaks of a refrain or ritornello. The idea is that what may seem like a bare repetition within a piece of art, especially a poem, in fact resonates with the dark precursor. Moreover, this is the fundamental rationale behind all the different instances of repetition in art. Here, mimesis reaches its ultimate level and, at the same time, returns to its origins. What was first a plain, unimaginative imitation of that which was seen in nature reveals itself as an attempt to capture the intangible essence, the invisible remainder of all repetitions of differences.

4.c. Example: Warhol’s Marilyn Diptych

Before we look at how this idea might bear on LLMs, let us consider an example already mentioned earlier: Warhol’s serial portraits. Here is his Marilyn Diptych from 1962:

Deleuze does not specifically mention this diptych. However, it exemplifies his description of “Warhol’s remarkable ‘serial’ series, in which all the repetitions of habit, memory and death are conjugated.”[25] The two-part painting presents a series of repetitions that are interrelated and displaced in various ways. They mimic Marilyn Monroe’s face in a semi-photorealistic fashion. These repetitions are displaced in the bare repetition of the same face, which is clothed in different shadings, and the bare repetition, in turn, is displaced in the series of paintings. The repetitions resonate with various kinds of repetitions, not only from the history of art but also from media and advertising. And the right-hand half of the painting, in particular, also resonates with the course of Monroe’s life and with her death in 1962, the same year the painting was produced. As we can see, bare repetition is not abandoned in favor of clothed repetition. Nor is plain, unimaginative mimesis replaced with creative transfiguration. Moreover, the creative process of repetition is not separated from the final artwork. There is no definitive element, as the artwork can be any part of the series or the entire series itself. Each product is temporary and subject to repetition in the eternal cycle of simulacra.

Let us also briefly consider the aleatory point: the transcendental other where everything becomes ungrounded.[26] Deleuze frames it as the ultimate throw of a dice: “An aleatory point is displaced through all the points on the dice, as though one time for all times.”[27] The aleatory point assumes, at the same time, the role of the subject, although displaced. It is the dissolved self, a “child player” that has entirely forgotten its past. If we want to identify the artist, this is where we should look.

These Deleuzian considerations apply to art in general, but they are especially relevant to LLM-generated art. To begin with, we must identify what serves the function of dark precursor in such art. The parameter tensors may seem to be the obvious candidate, but the tensor is a large, static object, whereas the precursor is an infinitesimally small element. If we allow the tensors to evolve over time, there must be general principles governing this evolution. However, these principles are static, meaning they themselves do not change over time. To make them dynamic, we can introduce another layer of principles that govern their evolution. But again, these higher-level principles will also be static. To make them dynamic, we would need to introduce yet another layer of principles, and so on. We can see that the static principle of evolution, that is, of differential change, always escapes to a higher level. The key idea is that a sequence of escapes from the originally static parameter tensor acts as the dark precursor. The objective of art generated by LLMs is to capture and express this dark precursor by aligning with the dynamics of the parameter tensor.

Two already-discussed features of LLMs could correspond to the aleatory point: temperature, which introduces the element of chance, and human fine-tuning, which necessarily involves a subject. As LLMs currently function, these are distinct features. However, in true Dionysian machines, they must be united in a single symbolic image. It is not difficult to imagine that human fine-tuning would introduce an element of randomness. What is more difficult to conceive is how the introduction of chance (temperature) could be synthesized into a symbolic image of the author, the artificial artist.
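The role of temperature as a source of chance can be made more concrete with a minimal Python sketch. It assumes the common scheme in which a model's raw scores are divided by the temperature before being turned into probabilities; the three-word vocabulary, the scores, and the function names are hypothetical illustrations and are not taken from any particular model.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, rescaled by a positive temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical vocabulary and scores, chosen only for illustration.
tokens = ["iris", "violet", "rose"]
logits = [2.0, 1.0, 0.1]

cold = softmax_with_temperature(logits, 0.1)   # low temperature: nearly deterministic
hot = softmax_with_temperature(logits, 10.0)   # high temperature: close to uniform

rng = random.Random(0)  # seeded so that repeated runs draw the same token
choice = sample_token(tokens, logits, 1.0, rng)
```

At a low temperature almost all of the probability falls on the highest-scoring token, so each repetition returns the same result; at a high temperature the probabilities flatten and chance plays a larger part in which token is repeated.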

This may be the furthest we can take our inquiry at this moment. A technology that could completely dynamize the model weights (parameter tensors) does not currently exist. However, as mentioned earlier, we do not fully understand how AI companies operate or which aspects of their models are updated dynamically. That said, it is important to note that such a technology is not mere fiction; it is within our grasp.[28]

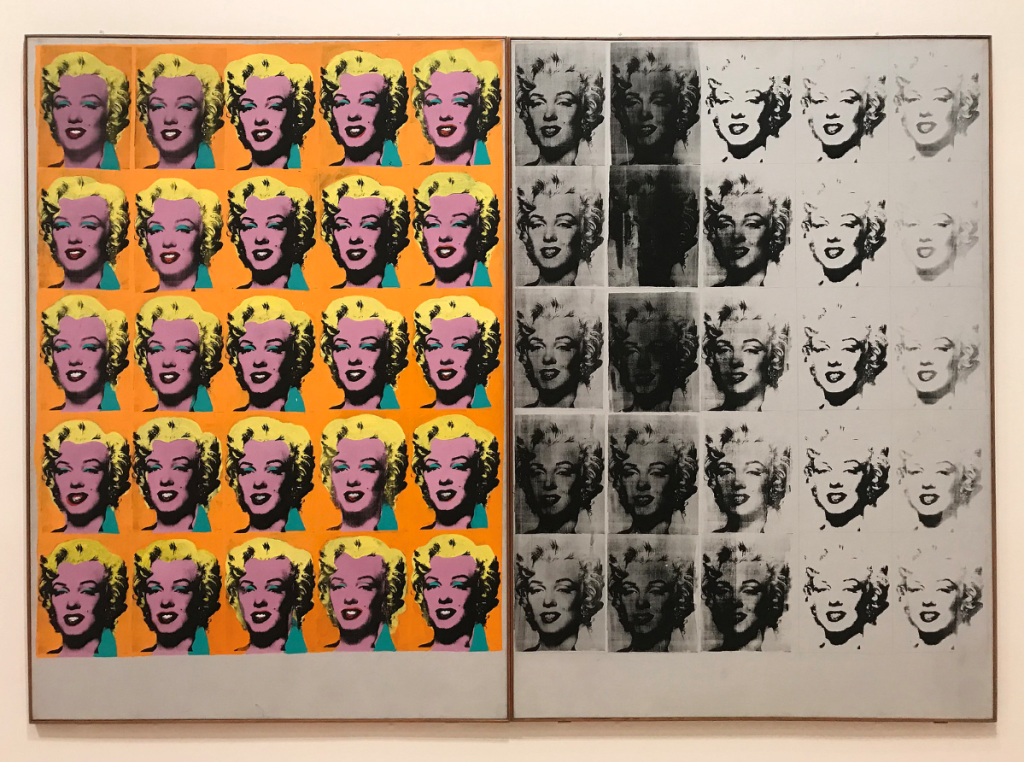

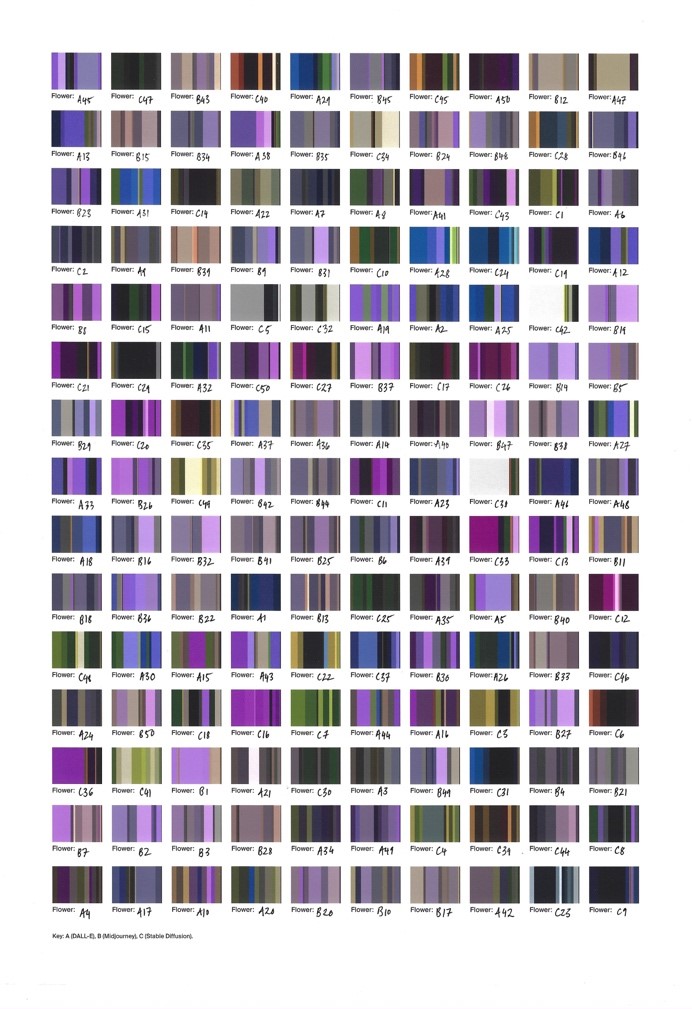

4.d. Example: Anna Ridler’s Synthetic Iris Dataset

Despite being a relatively new technology, GPTs have already made their presence felt in the world of art. Anna Ridler’s Synthetic Iris Dataset (2023) is a prime example of how GPT principles can be used to create thought-provoking works of art. By generating 150 unique flowers and pairing them with interpretations offered by a computer vision API, Ridler was able to reveal cultural and technological codes that would have otherwise gone unnoticed. Drawing inspiration from Ronald Fisher’s dataset, Ridler employed language to uncover hidden meanings in words like ‘iris’ and ‘violet’ that can refer to both flowers and colors. This showcases how GPT technology can be used to bring a new, previously unachievable level of complexity and richness to art.

It is notable that although this artwork was produced by an AI system, we still consider Anna Ridler the sole artist. The original artistic idea is Ridler’s—that is, it originated in her mind—whereas the LLM can be seen as a tool used to implement this idea. In other words, the computation was initiated by the artist’s input, and the artist chose the displayed artwork from among numerous results of that computation, many of which were deemed unsuitable. This is another instance of human-based fine-tuning.

This work of art does not dynamize the model weights; the way current LLMs work does not allow that. However, it does refer to a tiny part of the weights, namely the meanings of the words ‘iris’ and ‘violet.’ The dark precursor is located in the difference between the meanings of these words as embedded in the parameter tensors and their meanings as uncovered by this work of art. The path toward AIs as true Dionysian machines can be opened up through LLMs’ ability to dynamically incorporate newly uncovered meanings of this kind into their model weights. And after each update, the whole mimetic process can be repeated.

5. Final remarks

Traditionally, a variety of concepts (including expression, authorial intention, play, idea, and aura) have been employed to analyze aesthetic experiences of or with art. Mimesis belongs on this list as well. The main point of the present article is that the concept of mimesis, reframed as repetition in Deleuze’s sense, can help us to understand the distinctive qualities of LLM-generated art.

It should be stressed that AI-generated art is not wholly self-sufficient, insofar as it cannot come into existence or be appreciated without human involvement. The functionality of contemporary LLMs is contingent upon human input and feedback, which are essential to their operational processes—even if they did come to more closely resemble true Dionysian machines. Additionally, human feedback can take the form of selecting a suitable artwork from a vast array of often nonsensical AI outputs. While it is possible to speculate about the future of AI technologies, including the exponential increase in their computational power or the paradigm shift that may result from the advent of quantum computers, it is unlikely that any of these developments will eradicate human agency entirely. The author may be dead, and AI may reassert the author’s death, but this serves to emphasize that the author’s presence or identity does not affect the reader’s or viewer’s appreciation of the artwork. Moreover, if we turn our attention to the reader or viewer rather than the author or artist, there is no possibility that an AI could fulfill the former role, as it inherently requires a subject capable of appreciating art.

Large language models and GPTs can be seen as advanced tools that help artists to produce works of art.[30] If we look at the history of art, we will see that employing the best available technologies as tools has long been a common practice. Time and again, these technologies have transformed the way art is created. We might think, for instance, of casting bronze sculptures versus replicating them with pointing machines. And then there was the invention of printing, followed later on by painters’ workshops and the camera obscura. LLMs belong to this lineage.

We can draw parallels between the invention of photography in the nineteenth century and the development of current AI systems in terms of their impact on the art world and the study of aesthetics. The invention of photography sparked both skepticism and excitement within the art world. Traditional artists and critics initially viewed it as a mechanical process that lacked the creativity of painting, and many saw it as a threat to established art forms, particularly portraiture and academic painting. Compared with painting, photography was faster, cheaper, and more precise. The need for human involvement was reduced to setting up the camera, taking the picture, and then processing the negatives in the darkroom. However, some artists embraced photography, using it as a reference tool or experimenting with its possibilities. The medium also democratized art by making visual imagery more accessible to the public, challenging art’s exclusivity. Photography’s mimetic precision in capturing reality inspired new movements such as Impressionism and Cubism, as artists moved toward abstraction and symbolism. Over time, photography gained recognition as a legitimate art form.[31]

Photography led to the perfection of mimesis, understood as the imitation of external reality. The main idea presented in this essay is that LLMs are capable of achieving perfect mimesis, this time understood in the Deleuzian sense of the repetition of a creative difference. Even though this technology is still in its early stages, we can already see similar impacts on the art world. Skeptical reactions revolve around concerns that AI-driven art undermines human creativity, leading to the automation and devaluation of artistic processes. Critics argue that AI-generated works often lack the emotional depth, personal experience, and intentionality of human-created art. There are ethical concerns around authorship and intellectual property, as well as fears that mass production of AI art could flood the market and diminish the uniqueness of handmade art. Additionally, biases in AI systems, alongside the potential loss of individual artistic identity, contribute to skepticism about their growing influence in the art world.[32]

However, the historical comparison that I drew between AI art and photography suggests that the use of LLMs, or other generative AI systems, will likely lead to the emergence of new artistic forms, methods, and techniques. This progress will be further enhanced once LLMs are capable of fully dynamizing their parameter tensors and becoming true Dionysian machines. Formal ideas from traditional art genres have a significant influence on LLM-generated art today. However, we should also be alert to the possibility of the reverse influence, where the employment of LLMs leads to a modification of traditional art forms and categories, as was the case with photography. One of the key challenges facing academic aesthetics is to map these emergent forms and categories.[33]

Jakub Mácha

macha@mail.muni.cz

Jakub Mácha is a Professor of Aesthetics at Masaryk University in Brno, Czech Republic, and a researcher at the Metropolitan University Prague. He studied philosophy, art history, and computer science in Hannover and Brno. His publications focus on the philosophy of language and classical German philosophy. Mácha is the author of Wittgenstein on Internal and External Relations: Tracing All the Connections (Bloomsbury, 2015). He has also co-edited several volumes, including Wittgenstein and the Creativity of Language (Palgrave Macmillan, 2016), Wallace Stevens: Poetry, Philosophy, and Figurative Language (Peter Lang, 2018), Wittgenstein and Hegel: Reevaluation of Difference (De Gruyter, 2019), and Platonism (De Gruyter, 2024). His most recent book is The Philosophy of Exemplarity: Singularity, Particularity, and Self-Reference (Routledge, 2023).

Published on July 14, 2025.

Cite this article: Jakub Mácha, “The Mimesis of Difference: A Deleuzian Study of Generative AI in Artistic Production,” Contemporary Aesthetics, Special Volume 13 (2025), accessed date.

Endnotes

[1] Contemporary understandings of the concept of mimesis have been shaped by the work of Kendall Walton [see his Mimesis as Make-Believe (Cambridge, Massachusetts: Harvard University Press, 1990)]. His theory of mimesis emphasizes the role of imagination in how we experience and respond to art and suggests that art’s value lies in its ability to invite us into complex, shared worlds of make-believe. As ingenious as Walton’s approach is, it is difficult to apply it within the AI-focused context of the present discussion, since it operates with mental states that I do not attribute to AI systems. For a more favorable treatment of the concept of mimesis, see the recently published transcriptions of Fredric Jameson’s monumental lectures on aesthetic theory: Mimesis, Expression, Construction (London: Repeater Books, 2024). Most notably, drawing on Adorno, Jameson argues that “mimesis is this primordial activity, […] it’s both good and bad in some respects” (lecture five).

[2] Gilles Deleuze, The Logic of Sense (New York: Columbia University Press, 1990); Gilles Deleuze, Difference and Repetition (London: Continuum, 1994).

[3] Deleuze, The Logic of Sense, 263.

[4] Deleuze, Difference and Repetition, 293.

[5] Deleuze, Difference and Repetition, 293-4.

[6] The relevance of Barthes’s death of the author theory to the interpretation of AI-generated texts was recently recognized in Sofie Vlaad’s article, “Texts without Authors: Ascribing Literary Meaning in the Case of AI,” Journal of Aesthetics and Art Criticism 83, no. 1 (2025): 4-11. Vlaad’s key insight is that the meaning of an AI-generated text is determined by the audience.

[7] This discussion has mainly centered on texts and images. However, recent advancements in AI-driven 3-D printing technology suggest that material artifacts produced by AI may soon be a reality.

[8] Margaret A. Boden, “The Turing Test and Artistic Creativity,” Kybernetes 39 (2010): 409-13; Sarah McGregor et al., “Computational Creativity: A Philosophical Approach and an Approach to Philosophy,” 5th International Conference on Computational Creativity (ICCC) (Ljubljana, 2014); Mohammad M. al-Rifaie and Mark Bishop, “Weak and Strong Computational Creativity,” in T. R. Besold et al. (eds.), Computational Creativity Research: Towards Creative Machines (Paris: Atlantis Press, 2015), 37-49; Adam Linson, “Machine Art or Machine Artists?: Dennett, Danto, and the Expressive Stance,” in Vincent Müller (ed.), Fundamental Issues of Artificial Intelligence (Cham, Switzerland: Springer, 2016), 443-58; Arthur Miller, The Artist in the Machine: The World of AI-Powered Creativity (Cambridge, Massachusetts: MIT Press, 2019); Eva Cetinic and James She, “Understanding and Creating Art with AI: Review and Outlook,” arXiv:2102.09109 (2021); Joern Ploennigs and Markus Berger, “AI Art in Architecture,” AI in Civil Engineering 2, no. 8 (2023), doi: 10.1007/s43503-023-00018-y; Andrew Samo and Scott Highhouse, “Artificial Intelligence and Art: Identifying the Aesthetic Judgment Factors That Distinguish Human- and Machine-Generated Artwork,” Psychology of Aesthetics, Creativity, and the Arts, doi: 10.1037/aca0000570.

[9] Weiwen Chen et al., “AiArt: Towards Artificial Intelligence Art,” ThinkMind // MMEDIA 2020 (Lisbon, 2020); Mark Coeckelbergh, “The Work of Art in the Age of AI Image Generation: Aesthetics and Human-Technology Relations as Process and Performance,” Journal of Human-Technology Relations 1 (2023).

[10] Mercedes Bunz et al., “Creative AI Futures: Theory and Practice,” Proceedings of EVA London (2022).

[11] Boden, “The Turing Test and Artistic Creativity”; Mingyong Cheng, “The Creativity of Artificial Intelligence in Art,” Proceedings 81 (2022): 110; Giorgio Franceschelli and Mirco Musolesi, “On the Creativity of Large Language Models,” arXiv:2304.00008 (2023).

[12] Mark Coeckelbergh, “Can Machines Create Art?” Philosophy and Technology 30 (2017): 285-303.

[13] For comprehensive overviews, see Gokul Yenduri et al., “Generative Pre-Trained Transformer: A Comprehensive Review on Enabling Technologies, Potential Applications, Emerging Challenges, and Future Directions,” arXiv:2305.10435 (2023), doi: 10.48550/arXiv.2305.10435 or Partha Pratim Ray, “ChatGPT: A Comprehensive Review on Background, Applications, Key Challenges, Bias, Ethics, Limitations and Future Scope,” Internet of Things and Cyber-Physical Systems 3 (2023): 121-54, doi: 10.1016/j.iotcps.2023.04.003.

[14] Each row of the matrix corresponds to a unique token (the smallest meaningful units into which text is divided for analysis) in the vocabulary. The size of the vocabulary determines the number of rows in the embedding space. Each column represents a feature in the embedding space. The dimensionality of the embedding space is a parameter chosen during the training or modeling phase and determines the number of columns. When a token is input into a model that uses embeddings, the token is first mapped to its corresponding row in the embedding matrix. This row vector then serves as the feature vector for that token, encapsulating various semantic and syntactic properties.

[15] A vector is a list of numbers, a matrix is a table of numbers (rows and columns), and a tensor is a multi-dimensional generalization of a matrix.

[16] Today’s models often feature a search function, which augments responses by retrieving relevant external information. This information is then incorporated into the model’s output through context-aware generation, effectively extending its epistemic reach without modifying any learned parameters. In contrast, updating the model involves retraining or extending the original parameter tensors, whose parameters typically number in the hundreds of billions and which encode the model’s internal representations and behaviors. This pretraining process modifies the core model weights using a vast corpus of new data, enabling the model to internalize updated language patterns, factual knowledge, and reasoning strategies. Fine-tuning, by comparison, adjusts a subset of these weights, often via gradient descent on a smaller, domain-specific dataset, while preserving the base model’s general-purpose capabilities. It allows the model to specialize in particular tasks or align more closely with specific applications without necessitating full-scale retraining. (This note was added during the proof stage in June 2025.)

[17] The introduction of the attention mechanism in the previous decade has paved the way for the remarkable technological advancements witnessed in current LLMs.

[18] Note that the term ‘attention’ should not be interpreted in a psychological sense; rather, it metaphorically refers to computational processes. This anthropomorphizing metaphor should not mislead us into thinking that LLMs could be conscious agents. ‘Temperature’ is likewise to be understood metaphorically.

[19] Deleuze, Difference and Repetition, 181.

[20] Deleuze, Difference and Repetition, 201.

[21] Deleuze writes: “What defines the extraordinary power of that clothed repetition more profound than bare repetition is the reprise of singularities by one another, the condensation of singularities one into another, as much in the same problem or Idea as between one problem and another or from one Idea to another.” And further: “The clothed [repetition] lies underneath the bare, and produces or excretes it as though it were the effect of its own secretion” (Deleuze, Difference and Repetition, 201 and 289).

[22] The parameter tensors are the most important static components of current language models. Other static elements, such as the programming code, also need to be made dynamic. In this discussion, I will focus on how the parameter tensors could be made dynamic, which appears to be the most urgent issue.

[23] Deleuze, Difference and Repetition, 292.

[24] Source: https://www.flickr.com/photos/rocor/47080722114. Photo: Rob Coder, reprinted under CC BY-NC 2.0.

[25] Deleuze, Difference and Repetition, 294.

[26] Deleuze, Difference and Repetition, 144 and 200.

[27] Deleuze, Difference and Repetition, 283.

[28] It has been suggested that quantum computers could enable real-time integration and adaptation of training data. See Zhiding Liang et al., “Unleashing the Potential of LLMs for Quantum Computing: A Study in Quantum Architecture Design,” arXiv:2307.08191 (2023).

[29] Source: http://annaridler.com/synthetic-iris-dataset-2023. Photo: Simon Vogel. Courtesy: The artist and Galerie Nagel Draxler, Berlin/Cologne.

[30] The tool analogy is not the only one that has recently been used to explain the role of AI systems in artistic production. Young and Terrone suggest that certain AI systems should be viewed as a new type of artistic medium. In contrast, Anscomb suggests that AI systems can be seen as collaborators in the creative process, while Cross views AI systems as more active participants in art practice. Each of these viewpoints underscores a critical assumption: that human agency remains an integral part of the artistic endeavor. Cf. Nick Young and Enrico Terrone, “Growing the image: Generative AI and the medium of gardening,” Philosophical Quarterly (2024), doi: 10.1093/pq/pqae120. Claire Anscomb, “AI: artistic collaborator?” AI and Society (2024): 1-11, doi: 10.1007/s00146-024-02083-y. Anthony Cross, “Tool, Collaborator, or Participant: AI and Artistic Agency,” British Journal of Aesthetics (forthcoming). Jakub Mácha, “Framing the Unframable: Why AI Art Is a Battle of Metaphors,” AI & Society (2025), doi: 10.1007/s00146-025-02343-5.

[31] See Aaron Scharf, Art and Photography (London: Penguin, 1968).

[32] See Casey Watters and Michal K. Lemanski, “Universal Skepticism of ChatGPT: A Review of Early Literature on Chat Generative Pre-Trained Transformer,” Frontiers in Big Data 6 (2023), doi: 10.3389/fdata.2023.1224976, and Chinmay Shripad Kulkarni, “Ethical Implications of Large Language Models in Content Generation,” Journal of Artificial Intelligence, Machine Learning and Data Science 1, no. 1 (2022): 62-7, doi: 10.51219/JAIMLD/chinmay-shripad-kulkarni/32.

[33] I would like to express my gratitude to the three anonymous referees and the guest editors for their valuable feedback, which significantly enhanced this article. I would also like to thank the audience at the 10th Dubrovnik Conference on the Philosophy of Art 2024. This work was supported by the Czech Science Foundation, project number 23-06827S.